Upgrading my main file server to CentOS 8

The story

With the recent slowdown in packages for CentOS 7, I have been preparing to update at least my main file server to CentOS 8. Thankfully, when I was migrating my entire infrastructure to Linux 3+ years ago, I architected my file server with an OS drive and a separate data drive. From my vast stores of old hard disks, I pulled out a drive whose record indicates that it previously was the "C:\" drive for that exact same hardware, from 2015-2017. And now it is running instance 4 on that exact same platform, a Dell Precision Workstation 490. Before I was ready to schedule the downtime window, I practiced all the configs I could on a CentOS 8 VM. I wrote down the items I wanted to make sure got set up on the new install.

- apache with exact configs

- samba with freeipa user auth

- nfs

- Plex media server

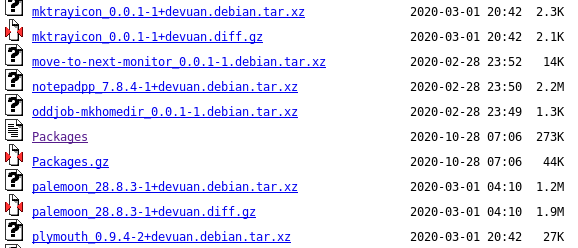

- my local mirrors of CentOS, Fedora, Devuan, and my OBS

- Master sync

- Google drive (rclone, from /root/.config)

- SpiderOakONE (from /root/.config)

- Rbup (my local backup shell script)

- All cronjobs (mostly the above tasks)

- Custom ssh config

- FreeIPA auth for ssh

- custom firewall rules

- /etc/installed directory

- custom Google Photos image sync (another rclone task)

Those are my notes from before doing any work on the production system. So, after collecting my thoughts and config files, practicing on a VM, and selecting a downtime window, I shut down my file server, removed its OS drive, stuck in the new one, and installed CentOS 8 minimal. Then, I followed a bunch of steps, loosely in order, to replicate my previous setup.

I had to load my root CA cert before I joined the server to IPA, so dnf could get through my transparent proxy. I copy-pasted the contents of my workstation's /etc/ipa/ca.crt into /etc/pki/ca-trust/source/anchors/ca-ipa.example.com.crt.

sudo update-ca-trust
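Since the cert was copy-pasted by hand, a quick sanity check before refreshing the trust store can catch a truncated paste. This is a minimal sketch only: the function name is made up for illustration, and it merely looks for the PEM marker lines rather than validating the certificate.

```shell
# Hypothetical helper: succeed only if the file contains both PEM marker lines.
# It does not parse or validate the certificate itself.
looks_like_pem_cert() {
    grep -q -e '-----BEGIN CERTIFICATE-----' "$1" &&
    grep -q -e '-----END CERTIFICATE-----' "$1"
}

# Usage, with CERT set to the anchor file written in the step above:
#   looks_like_pem_cert "$CERT" && echo "ok to refresh the trust store"
```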

Then, I installed my minimal software, [ignoring weak dependencies](/posts/2017/05/13/dnf-ignore-weak-dependencies/).

sudo dnf --setopt=install_weak_deps=False install bgscripts-core bash-completion expect
sudo dnf --setopt=install_weak_deps=False install bc bind-utils cifs-utils cryptsetup dosfstools epel-release expect firewalld git iotop mailx man mlocate net-tools nfs-utils parted python3-policycoreutils rpm-build rsync strace sysstat tcpdump telnet vim wget
sudo dnf --setopt=install_weak_deps=False install screen p7zip
sudo dnf remove 'iwl*'

I mounted up my data drive's logical volumes to /var/server1. And now came time for FreeIPA! With CentOS 8, FreeIPA is now in a "module" repository, and since I need ipa-server-trust-ad, I have to use the full module stream (DL1). The join itself uses my [domainjoin service account](/posts/2020/01/15/freeipa-service-account-to-join-systems-unattended/).

sudo dnf --setopt=install_weak_deps=False install @idm:DL1 ipa-server-trust-ad ipa-client
time sudo ipa-client-install --force-join --mkhomedir --principal=domainjoin --password=SEEKEEPASS

I knew I would need --force-join because I was using the same hostname as before, and as is typical, I failed to remove the old host. Before logging in, I set up my user's home directory to use the data drive with a symlink.

sudo ln -s /var/server1/shares/bgstack15 /home/bgstack15

And now I could log in as my domain user! So now it was time for setting up Samba with FreeIPA auth. Instead of duplicating that content, just read the linked post. I am not sure whether I documented this step there, but I added it for good measure:

sudo setsebool -P samba_export_all_rw 1

Here is where I had forgotten my first config file. I had to plug in my old disk drive and fetch my /etc/samba/smb.conf.

Next came all my firewall configs in one fell swoop.

sudo cp -p /var/server1/shares/public/Support/Systems/server1/prep/*xml /lib/firewalld/services/
sudo firewall-cmd --reload
sudo firewall-cmd --permanent --add-service=http-example --add-service=freeipa-samba --add-service=nfs-mod --add-service=plexmediaserver
sudo firewall-cmd --reload
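If the list of custom services grows, the `--add-service` flags can be generated from a list instead of typed out. The following is a dry-run sketch: the function name is hypothetical, and it only prints the command for review rather than running it.

```shell
# Hypothetical helper: turn service names into firewall-cmd --add-service flags.
firewall_service_args() {
    args=""
    for svc in "$@"; do
        # Append each flag, separated by a single space.
        args="${args:+$args }--add-service=$svc"
    done
    printf '%s\n' "$args"
}

# Print (not run) the permanent rule command for the services used above.
echo "sudo firewall-cmd --permanent $(firewall_service_args http-example freeipa-samba nfs-mod plexmediaserver)"
```

After the real command and a reload, `sudo firewall-cmd --list-services` should show the added services.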

And for my nfs settings.

sudo dnf -y install nfs-utils
sudo systemctl enable rpcbind nfs-server --now

I set up my /etc/exports with my main shares:

/var/server1/shares 192.168.1.0/24(rw,sync,insecure)

And then I updated the running exports.

sudo exportfs -a
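One classic mistake in /etc/exports is a space between the client and its options, which per exports(5) applies the options to all hosts instead of the named client. A small check for that, as a sketch only (the function name is invented for illustration):

```shell
# Hypothetical check: succeed if any non-comment line has whitespace right
# before "(", i.e. options detached from the client they were meant for.
exports_has_stray_space() {
    grep -v '^[[:space:]]*#' "$1" | grep -q '[[:space:]]('
}

# Usage:
#   exports_has_stray_space /etc/exports && echo "check client(options) spacing" >&2
```

Running `sudo exportfs -v` afterward lists the active exports with their effective options.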

I had to copy in my mirror files for CentOS, Fedora, etc. I don't have a blog post for this topic, surprisingly, so go search for it until I write something about it. It's mostly just rsync to a known valid mirror for each distro and a cron entry.

For cron, I just copied in my archived files, and then I read through them to make sure I would have everything established for the jobs. Rclone for one:

sudo dnf install rclone

I was pleased to learn that rclone 1.51.0 is now packaged by the distro. I had to use a binary release in the past.

My apache setup is a little more involved. Due to architectural reasons (something screwy with Plex, if I recall correctly), I serve http on the local network on a different port.

sudo dnf install httpd
sudo semanage port -a -t http_port_t -p tcp 180
sudo semanage port -a -t http_port_t -p tcp 181
sudo semanage port -a -t http_port_t -p tcp 32400
sudo semanage port -a -t http_port_t -p tcp 32401
sudo setsebool -P httpd_graceful_shutdown 1
sudo cp -pr /etc/httpd/conf.d /etc/httpd/conf.d.orig
sudo tar -C /etc/httpd -zxf /var/server1/shares/public/Support/Systems/server1/prep/httpd_conf.d.2020-11-12.tgz
sudo cp -pr /etc/pki/tls /etc/pki/tls.orig
sudo tar -C /etc/pki -zxf /var/server1/shares/public/Support/Systems/server1/etc_pki_tls.2020-11-13.tgz
sudo mv /var/www /var/www.orig
sudo ln -s /var/server1/shares/public/www /var/www
sudo restorecon -Rv /etc/pki
sudo mv /etc/httpd/conf.d/nss.conf{,.off} # mod_nss is not here on centos8 or in my install anyway
sudo systemctl enable --now httpd # or systemctl start httpd
sudo setsebool -P httpd_use_nfs 1 # because we did this on old server.
sudo setsebool -P httpd_unified 1 # fixes the cgi-bin operations, and required semodule --disable_dontaudit --build
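The four port labels above follow one pattern, so they can be expressed as a loop. This is a dry-run sketch with a hypothetical function name; it prints the commands instead of executing them, so they can be reviewed first.

```shell
# Hypothetical helper: print one semanage command per port given as an argument.
print_http_port_cmds() {
    for port in "$@"; do
        printf 'sudo semanage port -a -t http_port_t -p tcp %s\n' "$port"
    done
}

# The same ports as in the commands above.
print_http_port_cmds 180 181 32400 32401
```

Once the real commands have run, `sudo semanage port -l | grep http_port_t` shows which ports carry the label.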

I set up my local backup scripts which do not have a post on this blog yet. I set up SpiderOak by installing its rpm and expanding my tarball of /root/.config which also included the rclone config.

wget --content-disposition https://spideroak.com/release/spideroak/rpm_x64
sudo dnf install /etc/installed/SpiderOakONE.7.5.0.1.x86_64.rpm
sudo tar -C /root -zxf /var/server1/shares/public/Support/Systems/server1/dot-config.2020-11-12.tgz # this also includes the rclone config

I copied my old /etc/installed directory into my new one, underneath a "server1-letterless" sub-directory. I also restored my old gnupg directory, for repository signing.

sudo tar -C /root -zxf /var/server1/shares/public/Support/Systems/server1/gnupg-dir.2020-11-12.tgz

And instead of mounting the nfs exports locally, I just set up symlinks.

sudo ln -s /var/server1/shares/public /mnt/public
sudo ln -s /var/server1/shares/bgstack15 /mnt/bgstack15

I installed xauth for X forwarding, so that I could run the SpiderOak desktop application and check settings that were eluding me in the command line interface.

sudo dnf install xauth

For Plex, I downloaded the latest rpm and installed it. I failed to record the exact filename or its link, but it's searchable. From my workstation, I opened an ssh session with port forwarding.

ssh -L 8888:localhost:32400 server1

That let me visit localhost:8888 in a web browser and set up Plex. I have signed my own cert for Plex and placed it in a pkcs12 file. See the hobo.house blog for instructions for that. So apparently I need yet another blog post.

# copy in /mnt/public/Support/Systems/server1/https-plex.ipa.example.com-plex.p12 to /var/lib/plexmediaserver/Library/Application\ Support/Plex\ Media\ Server/

Configure the web app to use that, and add my URL to the "custom server access URLs" field. I have some SELinux notes for Plex('s webhook in Apache). A summary:

time sudo checkmodule -M -m -o plexlog.mod /var/server1/shares/public/Support/Programs/Plex/webhooks/plexlog.te
time sudo semodule_package -o plexlog.pp -m plexlog.mod
time sudo semodule -i plexlog.pp
sudo dnf install jq
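The compile, package, and install steps are the same for any SELinux policy module, so they can be parameterized by module name. A dry-run sketch, assuming a hypothetical wrapper function that only prints the commands:

```shell
# Hypothetical wrapper: print the build and install steps for one module.
# $1 = module name, $2 = path to the .te source file.
selinux_module_cmds() {
    name="$1"
    te="$2"
    printf 'sudo checkmodule -M -m -o %s.mod %s\n' "$name" "$te"
    printf 'sudo semodule_package -o %s.pp -m %s.mod\n' "$name" "$name"
    printf 'sudo semodule -i %s.pp\n' "$name"
}

# The plexlog module from the steps above.
selinux_module_cmds plexlog /var/server1/shares/public/Support/Programs/Plex/webhooks/plexlog.te
```

After a real install, `sudo semodule -l | grep plexlog` confirms the module is loaded.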

For the debmirror, I had to enable an additional repo that is not enabled by default in yum/dnf.

sudo dnf install --enablerepo=PowerTools lzma debmirror dpkg-dev

And then add my local user for obsmirror.

sudo useradd -u 1001 obsmirror
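As for the rsync-based distro mirrors mentioned earlier (rsync to a known valid mirror plus a cron entry), a job might look roughly like this. It is a dry-run sketch, not my actual scripts: the upstream URL, local path, script name, and schedule are all placeholders.

```shell
# Hypothetical helper: print the rsync command for one mirror tree.
# $1 = upstream rsync URL (placeholder), $2 = local destination (placeholder).
mirror_sync_cmd() {
    upstream="$1"
    dest="$2"
    # -a preserves attributes; --delete drops files removed upstream.
    printf 'rsync -a --delete %s %s\n' "$upstream" "$dest"
}

mirror_sync_cmd rsync://mirror.example.org/centos/8/ /srv/mirror/centos/8/

# A matching /etc/cron.d entry (also hypothetical) might read:
# 15 2 * * *  root  /usr/local/bin/mirror-centos.sh
```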

The long tail of checking backups

And the most important thing for a file server, of course, is to ensure backups are working.
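One simple starting point is to confirm that each backup destination has received something recently. The following is a minimal sketch under that assumption: the function name and directory are hypothetical, and a fresh timestamp says nothing about whether the backup contents are restorable.

```shell
# Hypothetical check: succeed if the directory holds at least one regular file
# modified within the last N days.
# $1 = backup directory, $2 = maximum age in days.
backup_is_fresh() {
    dir="$1"
    days="$2"
    [ -n "$(find "$dir" -type f -mtime "-$days" 2>/dev/null | head -n 1)" ]
}

# Usage (directory is a placeholder):
#   backup_is_fresh /path/to/backups 2 || echo "backups look stale" >&2
```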

Final thoughts

Either samba or ipa-server-trust-ad (or both) requires libX11. So for the first time, my headless server has X11 on it. I feel kind of icky; stay tuned to this blog for updates if I can somehow remove this dependency.
